Thursday, May 08, 2025

The Digital Press

All the Bits Fit to Print

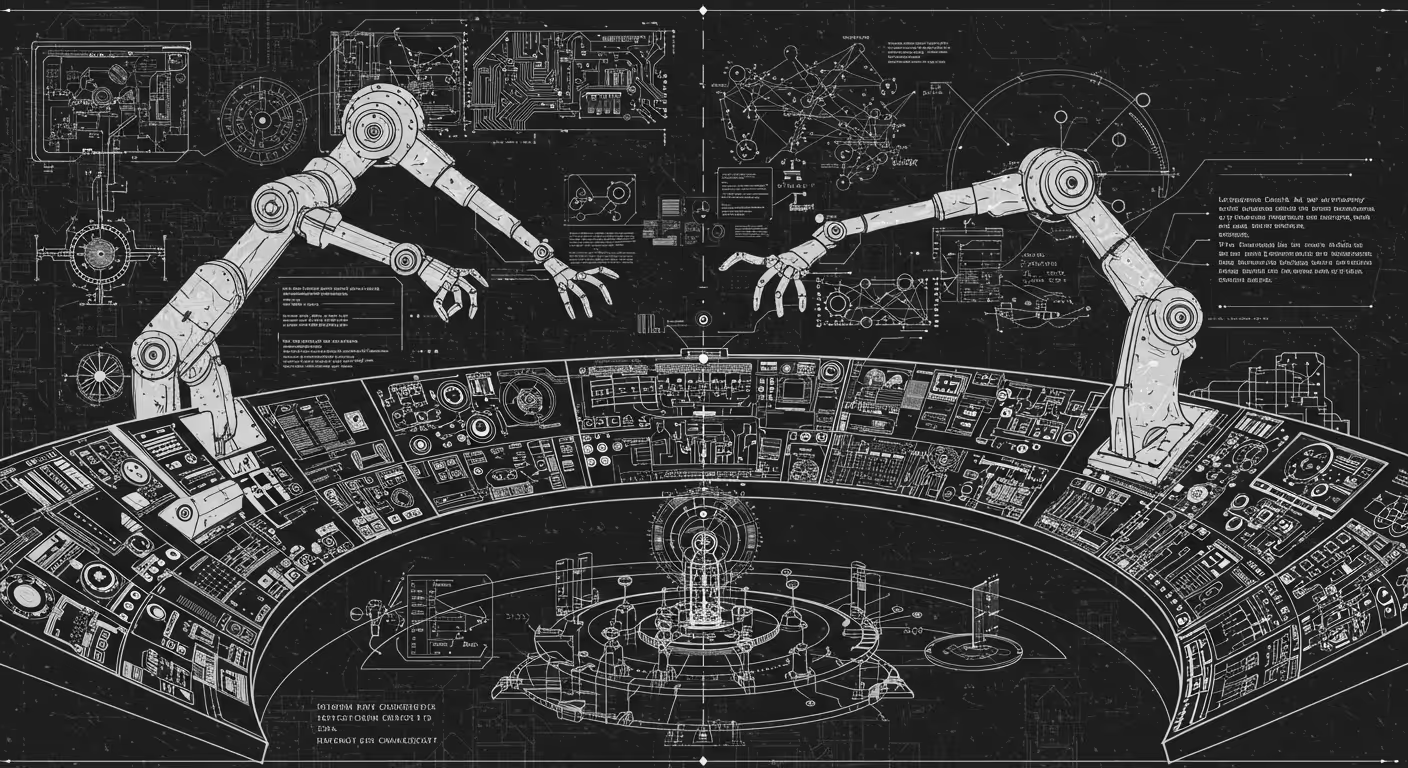

Strategic scenarios and research priorities for preventing AI-related risks

Experts warn that advancing AI could soon surpass human intelligence and, without proper control and governance, pose catastrophic risks up to and including human extinction.

Why it matters: Uncontrolled AI development risks misuse, war, authoritarianism, and potentially human extinction.

The big picture: Four geopolitical AI futures are outlined: global AI restrictions, a US-led AI race, light regulation, and sabotage deterrence.

The stakes: Likely only international cooperation, with an “Off Switch” and a coordinated halt, can prevent catastrophic AI outcomes.

Quick takeaway: Urgent research and policy action are needed to build governance, halt dangerous AI development, and enable global agreements.