Tuesday, May 27, 2025

The Digital Press

All the Bits Fit to Print

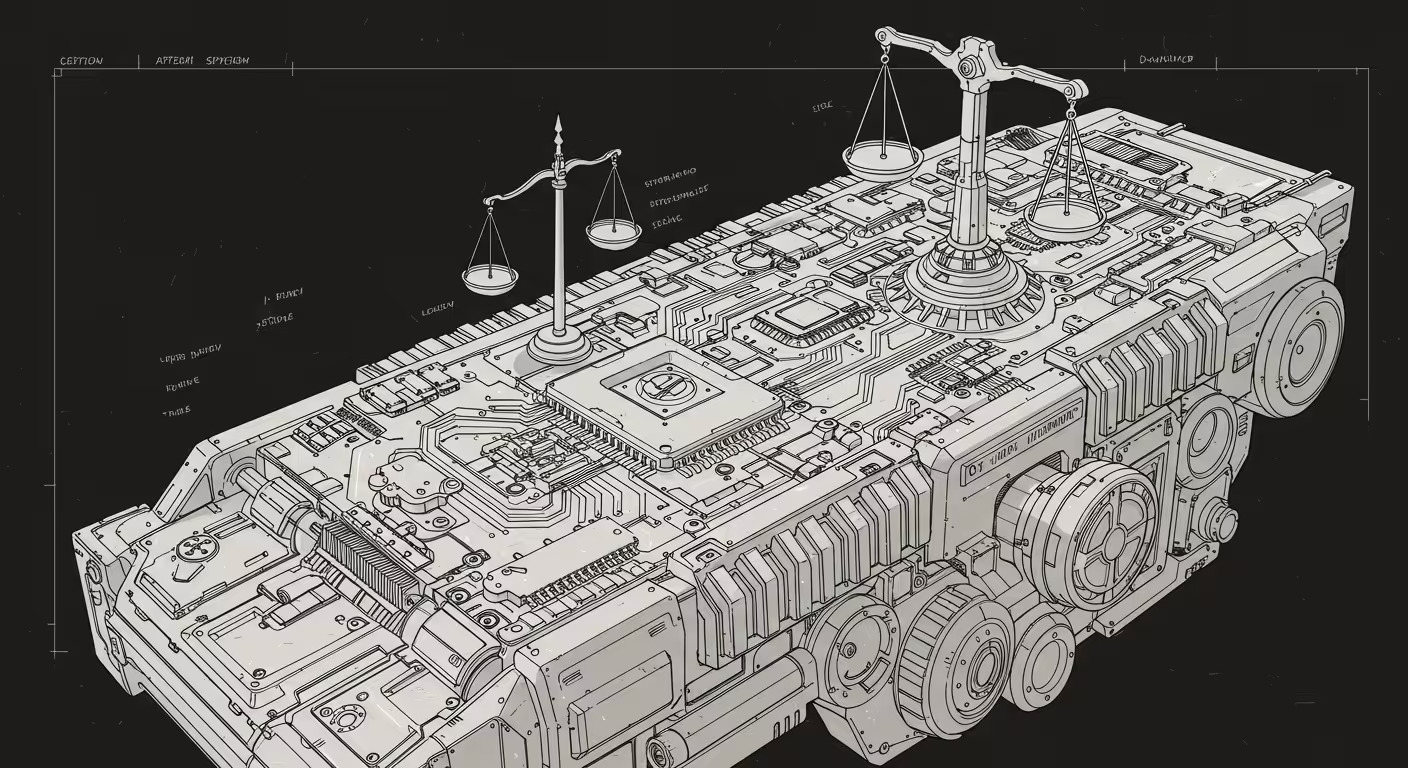

Proposing technically grounded regulation for AI in military systems

Artificial intelligence is increasingly integrated into lethal autonomous weapon systems (LAWS). These AI-powered systems (AI-LAWS) raise new risks for both military effectiveness and the norms of ethical warfare. This paper highlights the need for technically grounded regulation and for AI researchers to take an active role in policymaking.

Why it matters: AI-LAWS pose unique risks like unintended escalation, unreliable behavior, and reduced human control in combat.

The big picture: Current policies fail to differentiate AI-driven lethal systems from conventional ones, risking inadequate regulation.

Stunning stat: AI-LAWS can fail unpredictably in unfamiliar environments, undermining both military effectiveness and stability.

Quick takeaway: Defining AI-LAWS by their AI-specific behaviors enables clearer regulation, and drawing those definitions well requires AI researchers' involvement in policy.